Data Querying From ReductStore Database

ReductStore is a time series database that provides efficient data retrieval capabilities. This guide explains how to query data from ReductStore using the CLI, HTTP API, and SDKs.

Concepts

ReductStore provides an efficient data retrieval solution by batching multiple records within a specified time interval into a single HTTP request, which is beneficial for managing large volumes of data as it reduces the number of requests and overall delay.

The query process is designed as an iterator, returning a batch of records in each iteration. This method allows data to be processed in segments, an approach that is useful when managing large datasets.

While it is possible to retrieve a record by its timestamp, this method is less optimal than querying by a time range due to the lack of batching. However, this approach can be useful for querying specific versions of non-time series records, such as AI models, configurations, or file versions, when timestamps are used as identifiers.

Query Parameters

Data can be queried using the ReductStore CLI, SDKs or HTTP API. The query parameters are the same for all interfaces and are divided into two categories: filter and control parameters.

Filter Parameters

Filter parameters are used to select records based on specific criteria. You can combine multiple filter parameters to create complex queries. This is the list of filter parameters, sorted by priority:

| Parameter | Description | Type | Default |

|---|---|---|---|

start | Start time of the query | Timestamp | The timestamp of the first record in the entry |

stop | Stop time of the query | Timestamp | The timestamp of the last record in the entry |

when | Conditional query | JSON-like object | No condition |

Time Range

The time range is defined by the start and stop parameters.

Records with a timestamp equal to or greater than start and less than stop are included in the result.

If the start parameter is not set, the query starts from the begging of the entry.

If the stop parameter is not set, the query continues to the end of the entry.

If both start and stop are not set, the query returns the entire entry.

Control Parameters

There are also more advanced parameters available in the SDKs and HTTP API to control the query behavior:

| Parameter | Description | Type | Default |

|---|---|---|---|

ttl | Time-to-live of the query. The query is automatically closed after TTL | Integer | 60 |

head | Retrieve only metadata | Boolean | False |

continuous | Keep the query open for continuous data retrieval | Boolean | False |

poll_interval | Time interval in seconds for polling data in continuous mode data in continuous mode | Integer | 1 |

strict | Enable strict mode for conditional queries | Boolean | False |

TTL (Time-to-Live)

The ttl parameter determines the time-to-live of a query. The query is automatically closed when the specified time has elapsed since it was created. This prevents memory leaks by limiting the number of open queries. The default TTL is 60 seconds.

Head Flag

The head flag is used to retrieve only metadata. When set to true, the query returns the records' metadata, excluding the actual data. This parameter is useful when you want to work with labels without downloading the content of the records.

Continuous Mode

The continuous flag is used to keep the query open for continuous data retrieval. This mode is useful when you need to monitor data in real-time. The SDKs provide poll_interval parameter to specify the time interval for polling data in continuous mode. The default interval is 1 second.

Strict Mode

The strict flag is used to enable strict mode for conditional queries in the where parameter. In strict mode, the query fails if the condition is invalid or contains an unknown field.

When the strict mode is disabled, the invalid condition is considered as false and the unknown field is ignored.

Typical Data Querying Cases

This section provides guidance on implementing typical data querying cases using the ReductStore CLI, SDKs, or HTTP API. All examples are designed for a local ReductStore instance, accessible at http://127.0.0.1:8383 using the API token 'my-token'.

For more information on setting up a local ReductStore instance, see the Getting Started guide.

Querying Data by Time Range

The most common use case is to query data within a specific time range:

- Python

- JavaScript

- Go

- Rust

- C++

- CLI

- Web Console

- cURL

import time

import asyncio

from reduct import Client, Bucket

async def main():

# Create a client instance, then get or create a bucket

async with Client("http://127.0.0.1:8383", api_token="my-token") as client:

bucket: Bucket = await client.create_bucket("my-bucket", exist_ok=True)

ts = time.time()

await bucket.write(

"py-example",

b"Some binary data",

ts,

)

# Query records in the "py-example" entry of the bucket

async for record in bucket.query("py-example", start=ts, stop=ts + 1):

# Print meta information

print(f"Timestamp: {record.timestamp}")

print(f"Content Length: {record.size}")

print(f"Content Type: {record.content_type}")

print(f"Labels: {record.labels}")

# Read the record content

content = await record.read_all()

assert content == b"Some binary data"

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

import { Client } from "reduct-js";

import assert from "node:assert";

// Create a client instance, then get or create a bucket

const client = new Client("http://127.0.0.1:8383", { apiToken: "my-token" });

const bucket = await client.getOrCreateBucket("bucket");

// Send a record to the "js-example" entry with the current timestamp in microseconds

const timestamp = BigInt(Date.now()) * 1000n;

let record = await bucket.beginWrite("js-example", timestamp);

await record.write("Some binary data");

// Query records in the "js-example" entry of the bucket

for await (let record of bucket.query(

"js-example",

timestamp,

timestamp + 1000n,

)) {

// Print meta information

console.log(`Timestamp: ${record.time}`);

console.log(`Content Length: ${record.size}`);

console.log(`Content Type: ${record.contentType}`);

console.log(`Labels: ${JSON.stringify(record.labels)}`);

// Read the record content

let content = await record.read();

assert(content.toString() === "Some binary data");

}

package main

import (

"context"

reduct "github.com/reductstore/reduct-go"

"time"

)

func main() {

// Create a client and use the base URL and API token

client := reduct.NewClient("http://localhost:8383", reduct.ClientOptions{

APIToken: "my-token",

})

// Get or create a bucket with the name "my-bucket"

bucket, err := client.CreateOrGetBucket(context.Background(), "my-bucket", nil)

if err != nil {

panic(err)

}

// Send a record to the "go-example" entry with the current timestamp

ts := time.Now().UnixMicro()

err = bucket.BeginWrite(context.Background(), "go-example", &reduct.WriteOptions{

Timestamp: ts,

ContentType: "application/octet-stream",

}).Write([]byte("Some binary data"))

if err != nil {

panic(err)

}

// Query records in the "go-example" entry of the bucket

queryRequest, err := bucket.Query(context.Background(), "go-example", &reduct.QueryOptions{

Start: ts,

Stop: ts + 1,

})

if err != nil {

panic(err)

}

for record := range queryRequest.Records() {

// Print meta information

println("Timestamp:", record.Time())

println("Content Length:", record.Size())

println("Content Type:", record.ContentType())

println("Labels:", record.Labels())

// Read the record content

content, err := record.ReadAsString()

if err != nil {

panic(err)

}

if string(content) != "Some binary data" {

panic("Content mismatch")

}

}

}

use std::time::{Duration, SystemTime};

use bytes::Bytes;

use futures::StreamExt;

use reduct_rs::{ReductClient, ReductError};

use tokio;

#[tokio::main]

async fn main() -> Result<(), ReductError> {

// Create a client instance, then get or create a bucket

let client = ReductClient::builder()

.url("http://127.0.0.1:8383")

.api_token("my-token")

.build();

let bucket = client.create_bucket("test").exist_ok(true).send().await?;

// Send a record to the "rs-example" entry with the current timestamp

let timestamp = SystemTime::now();

bucket

.write_record("rs-example")

.timestamp(timestamp)

.data("Some binary data")

.send()

.await?;

// Query records in the time range

let query = bucket

.query("rs-example")

.start(timestamp)

.stop(timestamp + Duration::from_secs(1))

.send()

.await?;

tokio::pin!(query);

while let Some(record) = query.next().await {

let record = record?;

println!("Timestamp: {:?}", record.timestamp());

println!("Content Length: {}", record.content_length());

println!("Content Type: {}", record.content_type());

println!("Labels: {:?}", record.labels());

// Read the record data

let data = record.bytes().await?;

assert_eq!(data, Bytes::from("Some binary data"));

}

Ok(())

}

#include <reduct/client.h>

#include <iostream>

#include <cassert>

using reduct::IBucket;

using reduct::IClient;

using reduct::Error;

using std::chrono_literals::operator ""s;

int main() {

// Create a client instance, then get or create a bucket

auto client = IClient::Build("http://127.0.0.1:8383", {.api_token="my-token"});

auto [bucket, create_err] = client->GetOrCreateBucket("my-bucket");

assert(create_err == Error::kOk);

// Send a record with labels and content type

IBucket::Time ts = IBucket::Time::clock::now();

auto err = bucket->Write("cpp-example", ts,[](auto rec) {

rec->WriteAll("Some binary data");

});

assert(err == Error::kOk);

// Query records in a time range

err = bucket->Query("cpp-example", ts , ts+1s, {}, [](auto rec) {

// Print metadata

std::cout << "Timestamp: " << rec.timestamp.time_since_epoch().count() << std::endl;

std::cout << "Content Length: " << rec.size << std::endl;

std::cout << "Content Type: " << rec.content_type << std::endl;

std::cout << "Labels: " ;

for (auto& [key, value] : rec.labels) {

std::cout << key << ": " << value << ", ";

}

std::cout << std::endl;

// Read the content

auto [content, read_err] = rec.ReadAll();

assert(read_err == Error::kOk);

std::cout << "Content: " << content << std::endl;

assert(content == "Some binary data");

return true; // if false, the query will stop

});

assert(err == Error::kOk);

return 0;

}

reduct-cli alias add local -L http://localhost:8383 -t "my-token"

# Query data for a specific time range and export it to a local directory

reduct-cli cp local/example-bucket ./export --start "2021-01-01T00:00:00Z" --stop "2021-01-02T00:00:00Z"

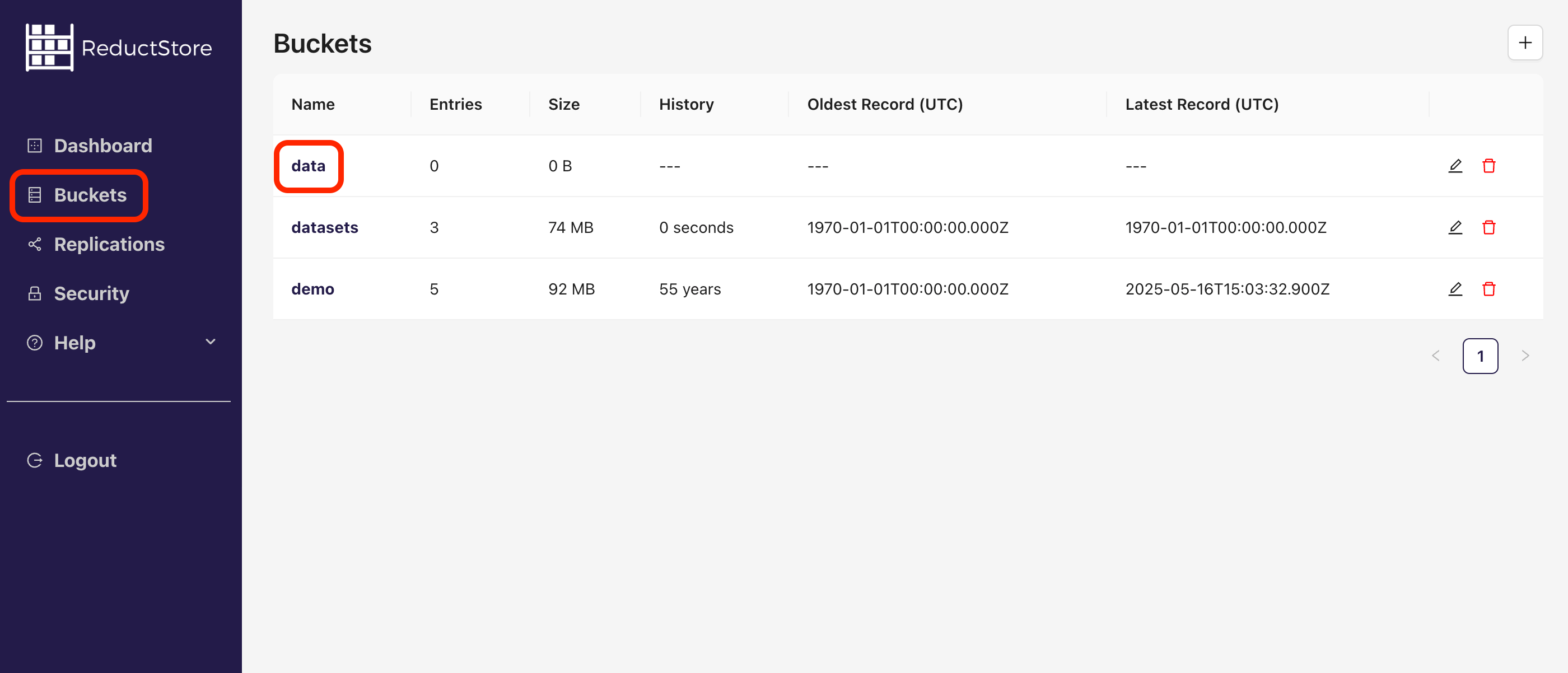

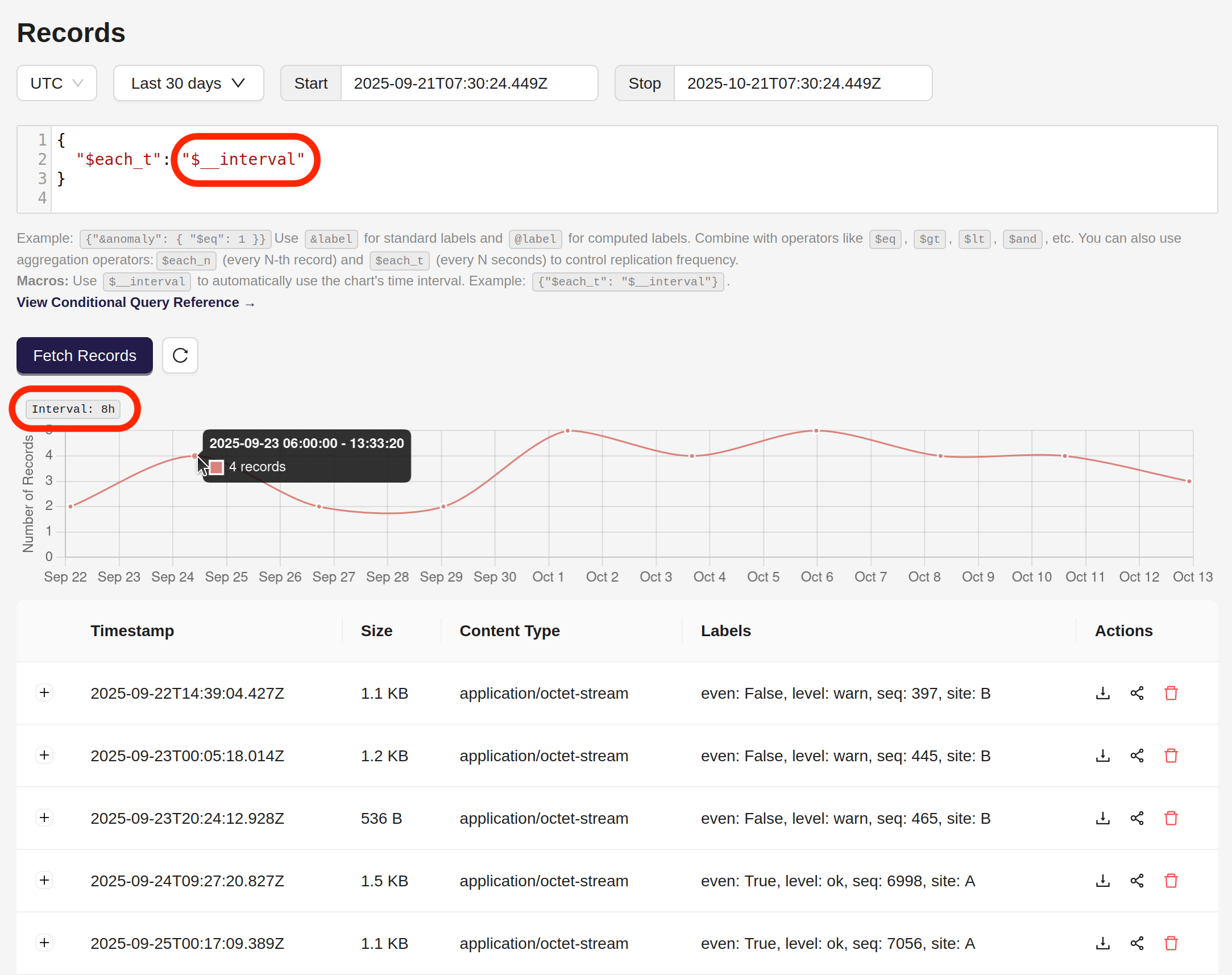

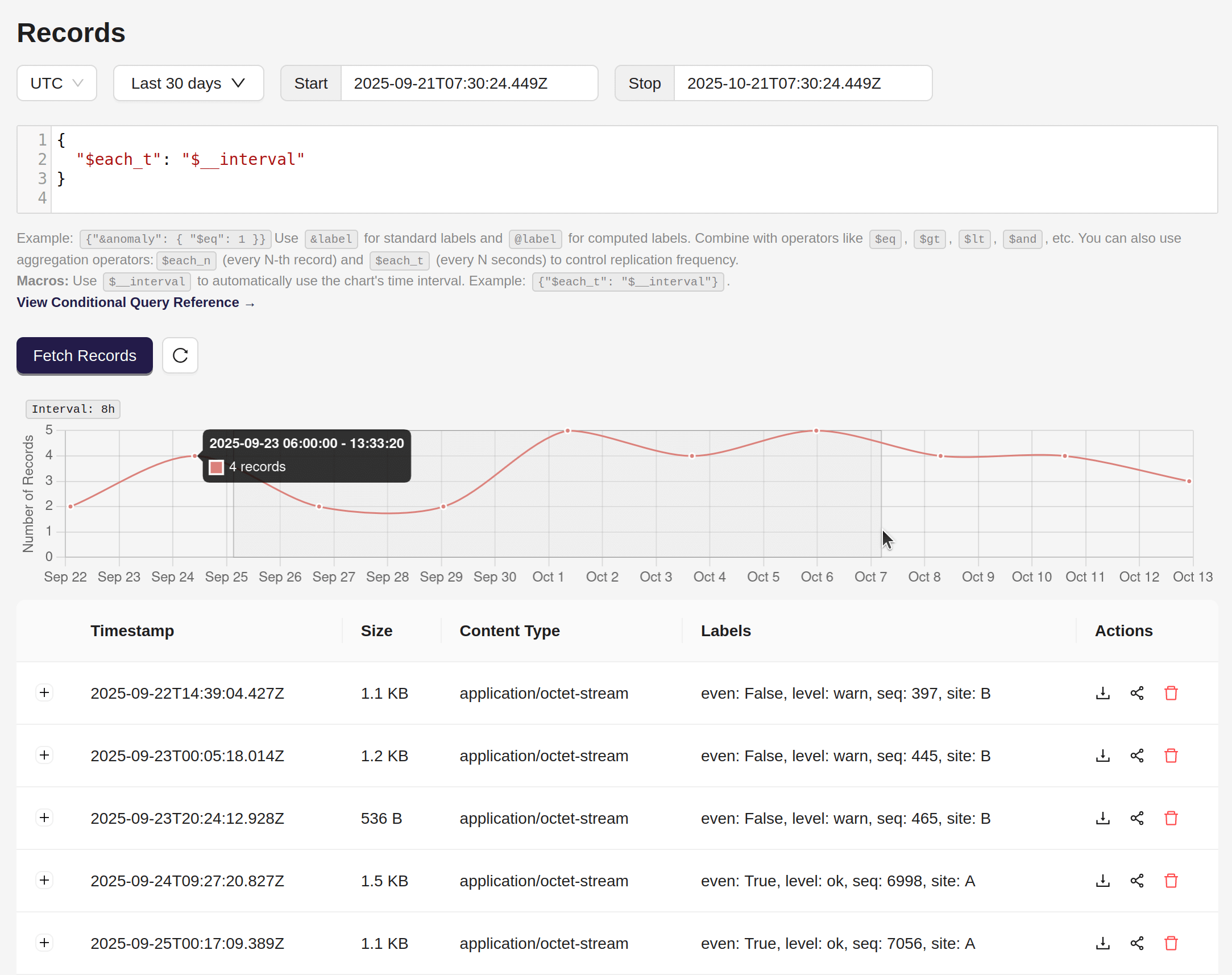

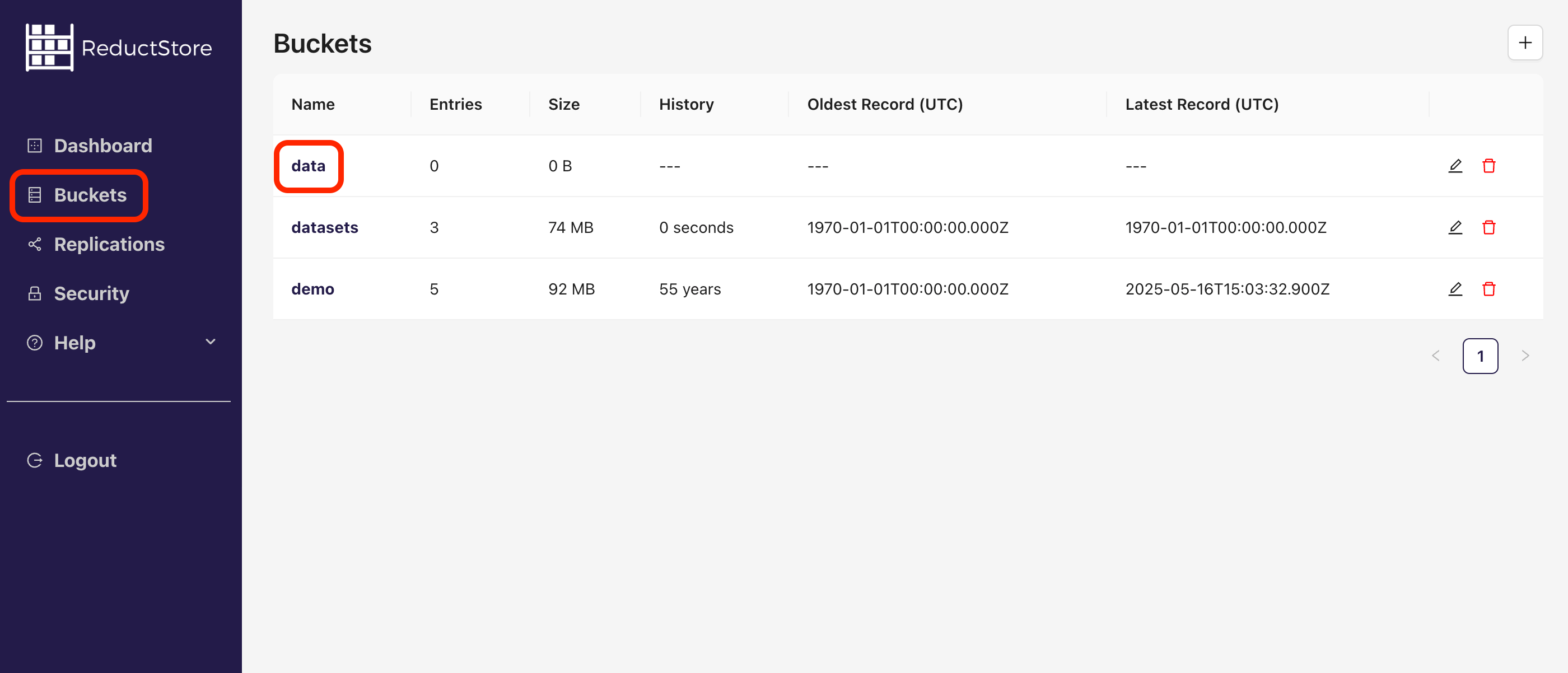

- Open the Web Console at

http://127.0.0.1:8383in your browser. - Enter the API token if the authorization is enabled.

- Select the bucket that contains the data you want to query:

- You will see a list of all the entries in the bucket

- Click on the entry you want to query

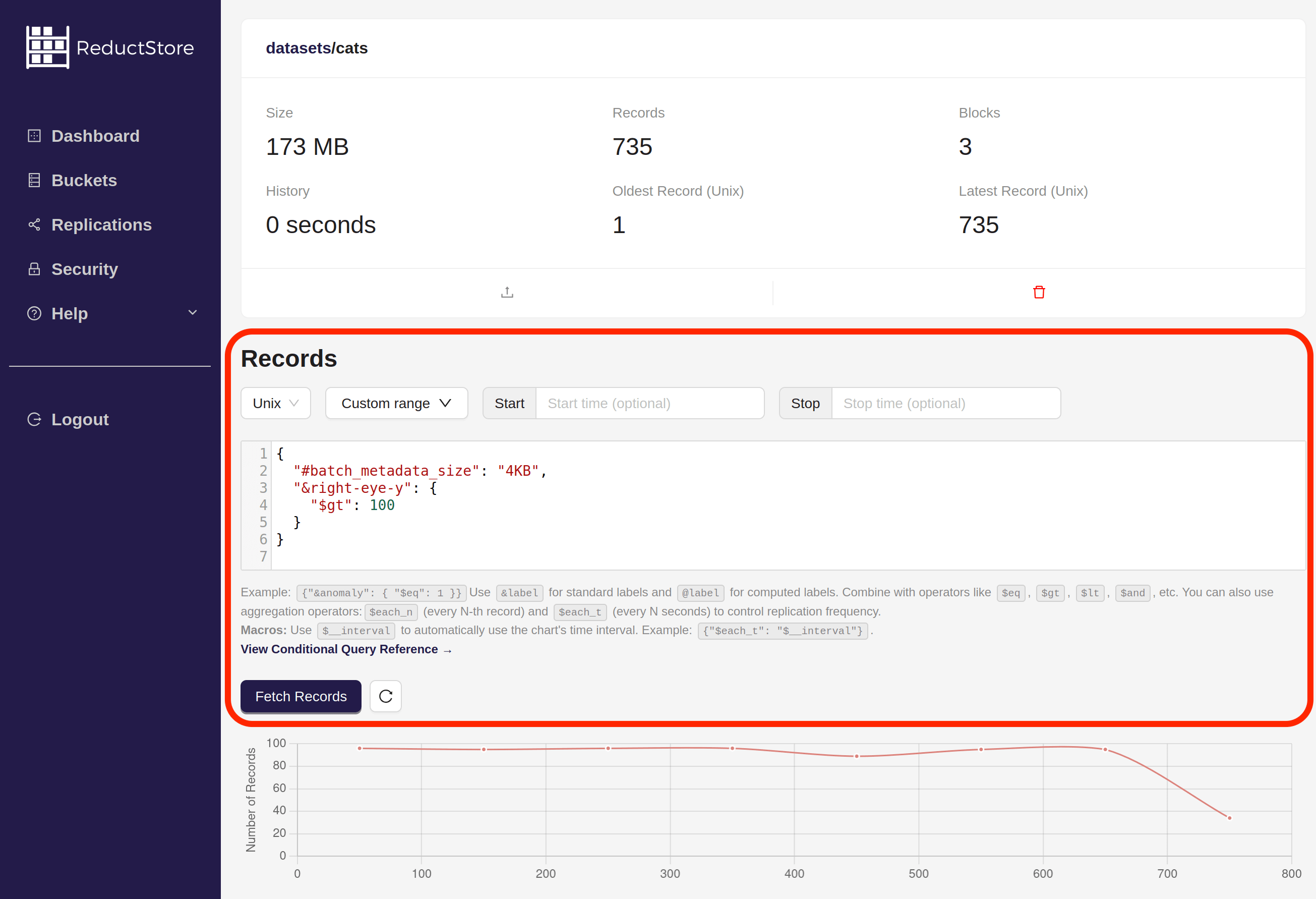

- On the entry page you will see the query panel:

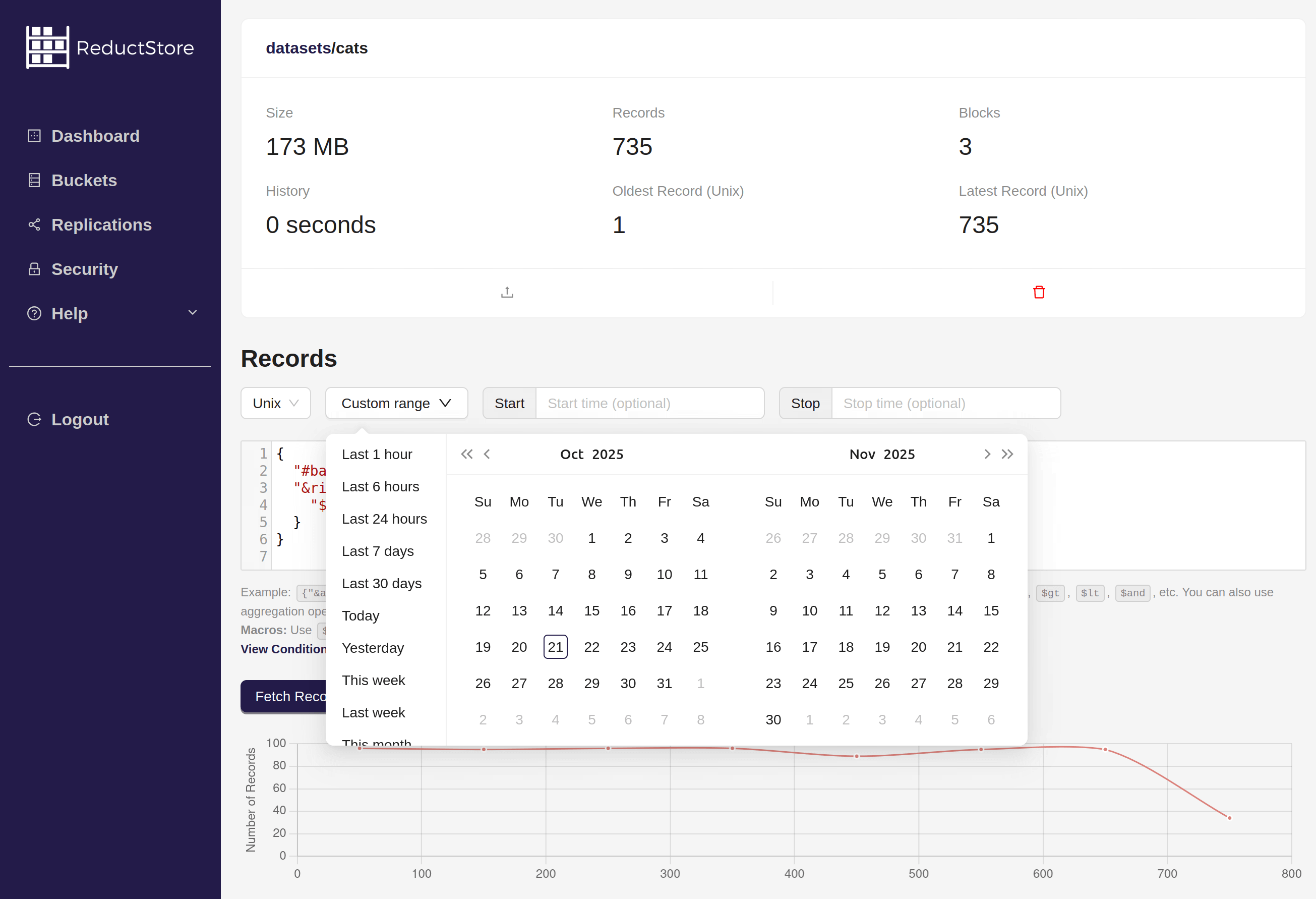

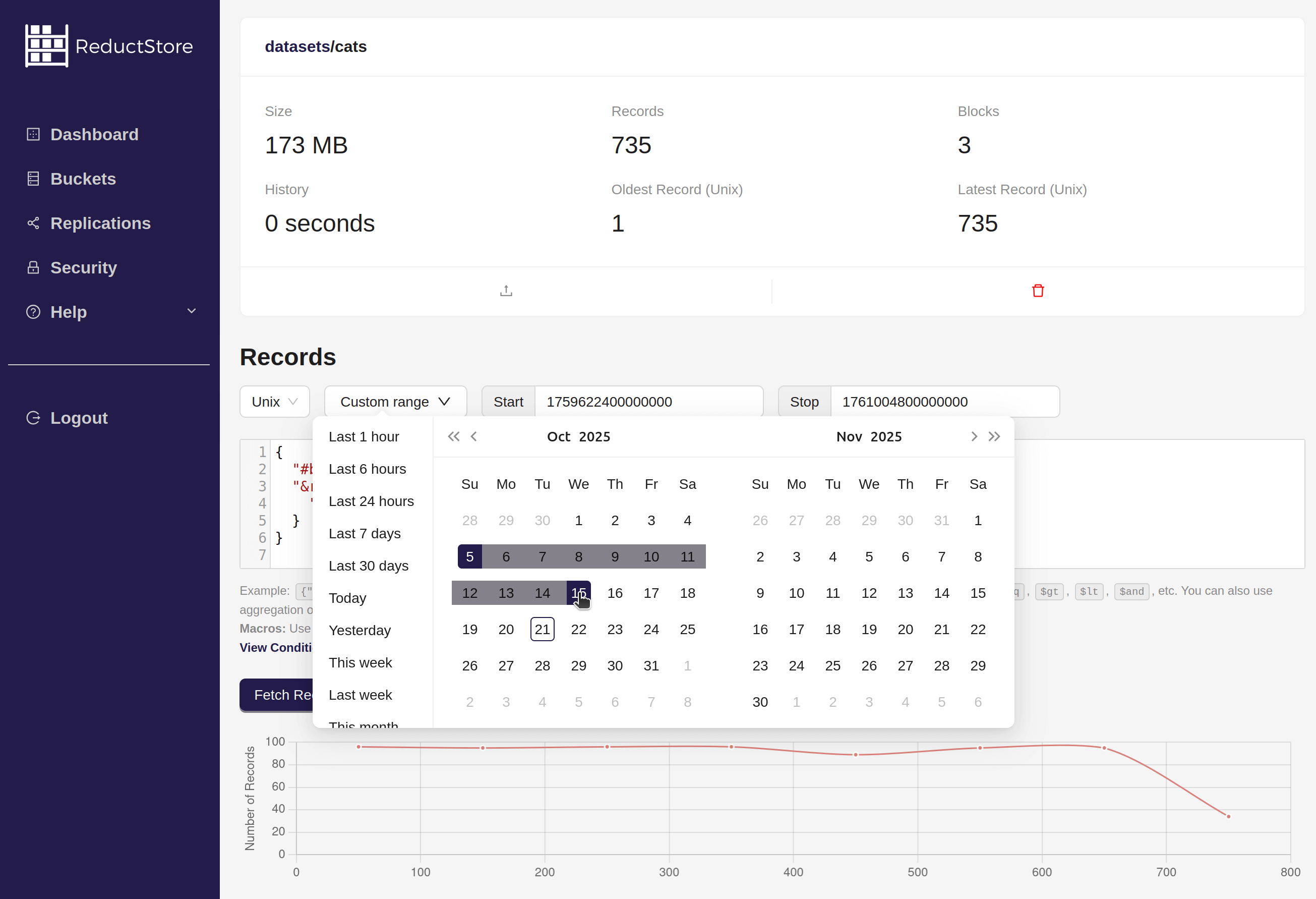

- Enter the time range in the date picker or manually in the input fields

- You can also use the Time Preset dropdown to select a predefined time range, such as "Last 24 hours" or "Last 7 days". The time range will be automatically filled in the input fields.

- Or you can select a time range using the Customize option.

- Click the Fetch Records button to execute the query

- You will see the meta information about the records that match the time range. You can click on the download icon to download content of the record.

#!/bin/bash

set -e -x

API_PATH="http://127.0.0.1:8383/api/v1"

AUTH_HEADER="Authorization: Bearer my-token"

# Write a record to bucket "example-bucket" and entry "entry_1"

TIME=`date +%s000000`

curl -d "Some binary data" \

-H "${AUTH_HEADER}" \

-X POST -a ${API_PATH}/b/example-bucket/entry_1?ts=${TIME}

# Query data for a specific time range

STOP_TIME=`date +%s000000`

ID=`curl -H "${AUTH_HEADER}" \

-d '{"query_type": "QUERY", "start": '${TIME}', "stop": '${STOP_TIME}'}' \

-X POST -a "${API_PATH}/b/example-bucket/entry_1/q" | jq -r ".id"`

# Fetch the data (without batching)

curl -H "${AUTH_HEADER}" -X GET -a "${API_PATH}/b/example-bucket/entry_1?q=${ID}"

curl -H "${AUTH_HEADER}" -X GET -a "${API_PATH}/b/example-bucket/entry_1?q=${ID}"

Querying Record by Timestamp

The simplest way to query a record by its timestamp is to use the read method provided by the ReductStore SDKs or HTTP API:

- Python

- JavaScript

- Go

- Rust

- C++

- Web Console

- cURL

import time

import asyncio

from reduct import Client, Bucket

async def main():

# Create a client instance, then get or create a bucket

async with Client("http://127.0.0.1:8383", api_token="my-token") as client:

bucket: Bucket = await client.create_bucket("my-bucket", exist_ok=True)

ts = time.time()

await bucket.write(

"py-example",

b"Some binary data",

ts,

)

# Read the record in the "py-example" entry of the bucket

async with bucket.read("py-example", ts) as record:

# Print meta information

print(f"Timestamp: {record.timestamp}")

print(f"Content Length: {record.size}")

print(f"Content Type: {record.content_type}")

print(f"Labels: {record.labels}")

# Read the record content

content = await record.read_all()

assert content == b"Some binary data"

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

import { Client } from "reduct-js";

import assert from "node:assert";

// Create a client instance, then get or create a bucket

const client = new Client("http://127.0.0.1:8383", { apiToken: "my-token" });

const bucket = await client.getOrCreateBucket("bucket");

// Send a record to the "js-example" entry with the current timestamp in microseconds

const timestamp = BigInt(Date.now()) * 1000n;

let record = await bucket.beginWrite("js-example", timestamp);

await record.write("Some binary data");

// Read the record in the "js-example" entry of the bucket

record = await bucket.beginRead("js-example", timestamp);

// Print meta information

console.log(`Timestamp: ${record.time}`);

console.log(`Content Length: ${record.size}`);

console.log(`Content Type: ${record.contentType}`);

console.log(`Labels: ${JSON.stringify(record.labels)}`);

// Read the record content

let content = await record.read();

assert(content.toString() === "Some binary data");

package main

import (

"context"

reduct "github.com/reductstore/reduct-go"

"time"

)

func main() {

// Create a client and use the base URL and API token

client := reduct.NewClient("http://localhost:8383", reduct.ClientOptions{

APIToken: "my-token",

})

// Get or create a bucket with the name "my-bucket"

bucket, err := client.CreateOrGetBucket(context.Background(), "my-bucket", nil)

if err != nil {

panic(err)

}

// Send a record to the "go-example" entry with the current timestamp

ts := time.Now().UnixMicro()

err = bucket.BeginWrite(context.Background(), "go-example", &reduct.WriteOptions{

Timestamp: ts,

ContentType: "application/octet-stream",

}).Write([]byte("Some binary data"))

if err != nil {

panic(err)

}

// Read the record in the "go-example" entry of the bucket

record, err := bucket.BeginRead(context.Background(), "go-example", &ts)

if err != nil {

panic(err)

}

// Print meta information

println("Timestamp:", record.Time())

println("Content Length:", record.Size())

println("Content Type:", record.ContentType())

println("Labels:", record.Labels())

// Read the record content

content, err := record.ReadAsString()

if err != nil {

panic(err)

}

if string(content) != "Some binary data" {

panic("Content mismatch")

}

}

use std::time::SystemTime;

use bytes::Bytes;

use reduct_rs::{ReductClient, ReductError};

use tokio;

#[tokio::main]

async fn main() -> Result<(), ReductError> {

// Create a client instance, then get or create a bucket

let client = ReductClient::builder()

.url("http://127.0.0.1:8383")

.api_token("my-token")

.build();

let bucket = client.create_bucket("test").exist_ok(true).send().await?;

// Send a record to the "rs-example" entry with the current timestamp

let timestamp = SystemTime::now();

bucket

.write_record("rs-example")

.timestamp(timestamp)

.data("Some binary data")

.send()

.await?;

// Read the record by timestamp

let record = bucket

.read_record("rs-example")

.timestamp(timestamp)

.send()

.await?;

println!("Timestamp: {:?}", record.timestamp());

println!("Content Length: {}", record.content_length());

println!("Content Type: {}", record.content_type());

println!("Labels: {:?}", record.labels());

// Read the record data

let data = record.bytes().await?;

assert_eq!(data, Bytes::from("Some binary data"));

Ok(())

}

#include <reduct/client.h>

#include <iostream>

#include <cassert>

using reduct::IBucket;

using reduct::IClient;

using reduct::Error;

using std::chrono_literals::operator ""s;

int main() {

// Create a client instance, then get or create a bucket

auto client = IClient::Build("http://127.0.0.1:8383", {.api_token="my-token"});

auto [bucket, create_err] = client->GetOrCreateBucket("my-bucket");

assert(create_err == Error::kOk);

// Send a record with labels and content type

IBucket::Time ts = IBucket::Time::clock::now();

auto err = bucket->Write("cpp-example", ts,[](auto rec) {

rec->WriteAll("Some binary data");

});

assert(err == Error::kOk);

// Read the record by timestamp

err = bucket->Read("cpp-example", ts, [](auto rec) {

// Print metadata

std::cout << "Timestamp: " << rec.timestamp.time_since_epoch().count() << std::endl;

std::cout << "Content Length: " << rec.size << std::endl;

std::cout << "Content Type: " << rec.content_type << std::endl;

std::cout << "Labels: " ;

for (auto& [key, value] : rec.labels) {

std::cout << key << ": " << value << ", ";

}

std::cout << std::endl;

// Read the content

auto [content, read_err] = rec.ReadAll();

assert(read_err == Error::kOk);

std::cout << "Content: " << content << std::endl;

assert(content == "Some binary data");

return true; // if false, the query will stop

});

assert(err == Error::kOk);

return 0;

}

- To find a specific timestamp, you can first browse the records using the

$__intervalmacro in the Web Console. The macro automatically adjusts the $each_t operator based on the zoom level of the graph.

- You can zoom in the graph to select a specific time range by clicking and dragging the mouse over the graph area:

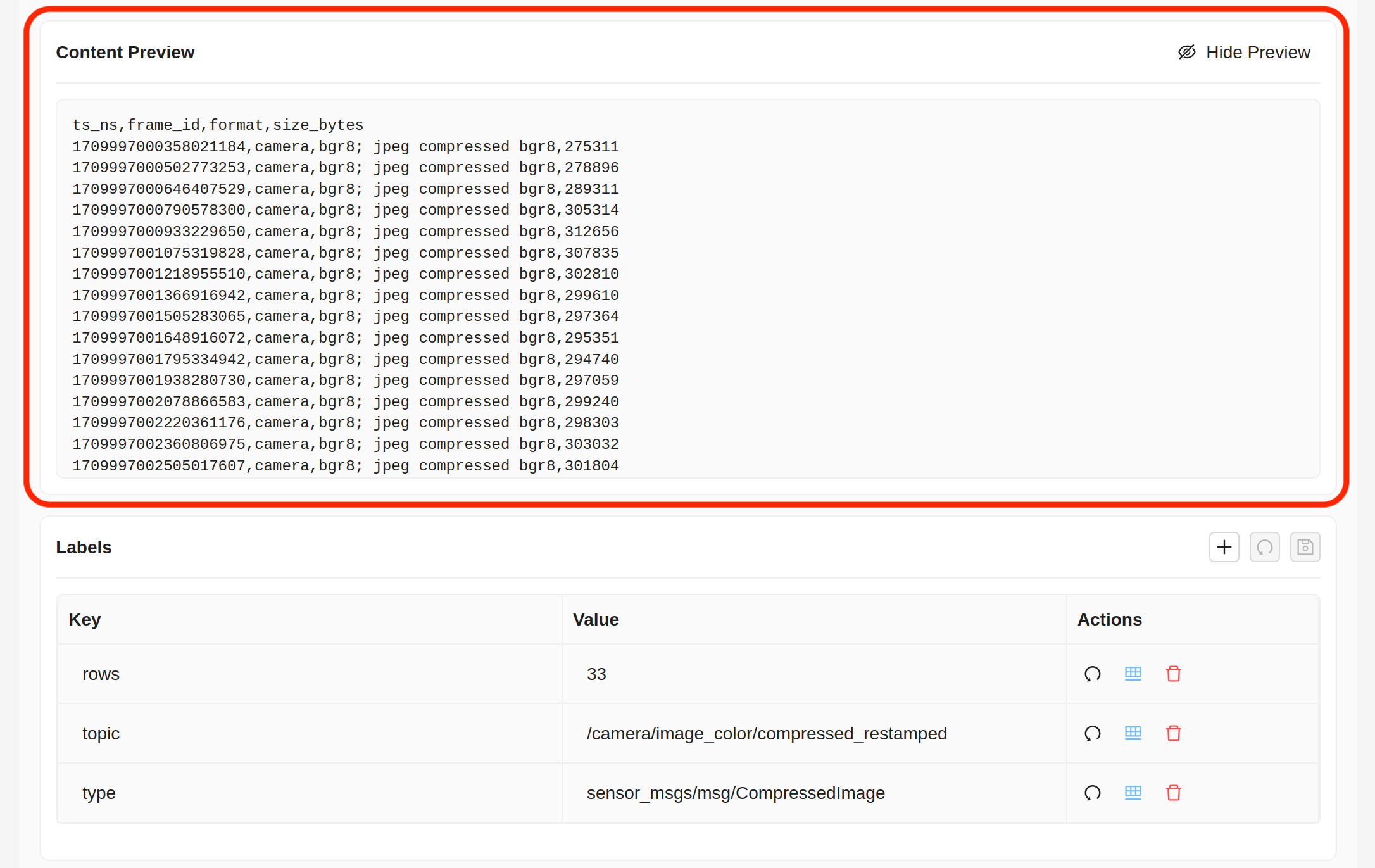

- On the record list below the graph, click on the record with the desired timestamp to preview its content:

#!/bin/bash

set -e -x

API_PATH="http://127.0.0.1:8383/api/v1"

AUTH_HEADER="Authorization: Bearer my-token"

# Write a record to bucket "example-bucket" and entry "entry_1"

TIME=`date +%s000000`

curl -d "Some binary data" \

-H "${AUTH_HEADER}" \

-X POST -a ${API_PATH}/b/example-bucket/entry_1?ts=${TIME}

# Fetch the record by timestamp

curl -H "${AUTH_HEADER}" -X GET -a "${API_PATH}/b/example-bucket/entry_1?ts=${TIME}"

Starting with version 1.18, you can also query records across multiple entries by providing the 'entry' parameter with a list of entry names or a wildcard pattern.

Using Labels to Filter Data

Filtering data by labels is another common use case. You can use the when parameter to filter records based on labels.

ReductStore supports a wide range of operators for conditional queries, including equality, comparison, and logical operators. Refer to the Conditional Query Reference for more information.

For example, consider a data set with annotated photos of celebrities. We want to retrieve the first 10 photos of celebrities taken after 2006 with a score less than 4:

- Python

- JavaScript

- Go

- Rust

- C++

- CLI

- Web Console

- cURL

import time

import asyncio

from reduct import Client, Bucket

async def main():

# Create a client instance, then get or create a bucket

async with Client("http://127.0.0.1:8383", api_token="my-token") as client:

bucket: Bucket = await client.get_bucket("example-bucket")

# Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

async for record in bucket.query(

"imdb",

when={

"&photo_taken": {"$gt": 2006},

"&face_score": {"$lt": 4},

"$limit": 10,

},

):

print("Name", record.labels["name"])

print("Photo taken", record.labels["photo_taken"])

print("Face score", record.labels["face_score"])

_jpeg = await record.read_all()

loop = asyncio.get_event_loop()

loop.run_until_complete(main())

import { Client } from "reduct-js";

// Create a client instance, then get or create a bucket

const client = new Client("http://127.0.0.1:8383", { apiToken: "my-token" });

const bucket = await client.getBucket("example-bucket");

// Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

for await (const record of bucket.query("imdb", undefined, undefined, {

when: {

"&photo_taken": { $gt: 2006 },

"&face_score": { $lt: 4 },

$limit: 10,

},

})) {

console.log("Name", record.labels.name);

console.log("Photo taken", record.labels.photo_taken);

console.log("Face score", record.labels.face_score);

await record.readAsString();

}

package main

import (

"context"

reduct "github.com/reductstore/reduct-go"

)

func main() {

// Create a client and use the base URL and API token

client := reduct.NewClient("http://localhost:8383", reduct.ClientOptions{

APIToken: "my-token",

})

// Get a bucket with the name "example-bucket"

bucket, err := client.GetBucket(context.Background(), "example-bucket")

if err != nil {

panic(err)

}

// Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

queryOptions := reduct.NewQueryOptionsBuilder().

WithWhen(map[string]any{

"&photo_taken": map[string]any{"$gt": 2006},

"&face_score": map[string]any{"$lt": 4},

"$limit": 10,

}).

Build()

query, err := bucket.Query(context.Background(), "imdb", &queryOptions)

if err != nil {

panic(err)

}

for record := range query.Records() {

println("Name:", record.Labels()["name"].(string))

println("Photo taken:", record.Labels()["photo_taken"].(string))

println("Face score:", record.Labels()["face_score"].(string))

}

}

use futures::StreamExt;

use reduct_rs::{condition, ReductClient, ReductError};

use tokio;

#[tokio::main]

async fn main() -> Result<(), ReductError> {

// Create a client instance, then get or create a bucket

let client = ReductClient::builder()

.url("http://127.0.0.1:8383")

.api_token("my-token")

.build();

let bucket = client

.create_bucket("example-bucket")

.exist_ok(true)

.send()

.await?;

// Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

let query = bucket

.query("imdb")

.when(condition!({

"&photo_taken": {"$gt": 2006},

"&face_score": {"$lt": 4},

"$limit": 10

}))

.send()

.await?;

tokio::pin!(query);

while let Some(record) = query.next().await {

let record = record?;

println!("Name: {:?}", record.labels().get("name"));

println!("Photo Taken: {:?}", record.labels().get("photo_taken"));

println!("Face Score: {:?}", record.labels().get("face_score"));

_ = record.bytes().await?;

}

Ok(())

}

#include <reduct/client.h>

#include <iostream>

#include <cassert>

using reduct::IBucket;

using reduct::IClient;

using reduct::Error;

using std::chrono_literals::operator ""s;

int main() {

// Create a client instance, then get or create a bucket

auto client = IClient::Build("http://127.0.0.1:8383", {.api_token="my-token"});

auto [bucket, create_err] = client->GetOrCreateBucket("example-bucket");

assert(create_err == Error::kOk);

// Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

auto err = bucket->Query("imdb", std::nullopt, std::nullopt, {

.when=R"({

"&photo_taken": {"$gt": 2006},

"&face_score": {"$lt": 4},

"$limit": 10

})",

}, [](auto rec) {

std::cout << "Name: " << rec.labels["name"] << std::endl;

std::cout << "Photo Taken: " << rec.labels["photo_taken"] << std::endl;

std::cout << "Face Score: " << rec.labels["face_score"] << std::endl;

auto [_, read_err] = rec.ReadAll();

assert(read_err == Error::kOk);

return true; // if false, the query will stop

});

assert(err == Error::kOk);

return 0;

}

reduct-cli alias add local -L http://localhost:8383 -t "my-token"

# Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

reduct-cli cp local/example-bucket ./export --when='{"&photo_taken": {"$gt": 2006}, "&face_score": {"$lt": 4}}' --limit 10 --with-meta

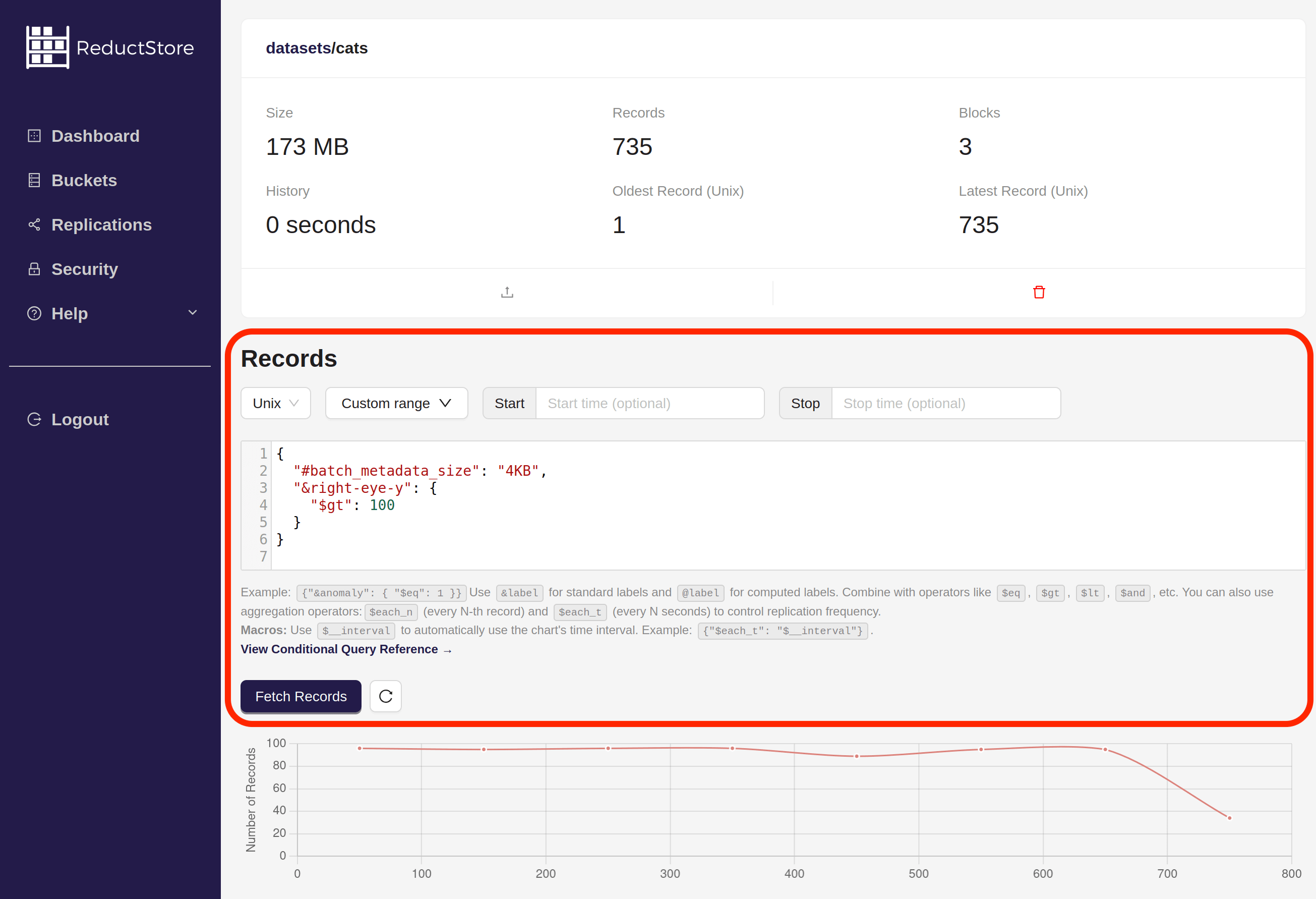

- Open the Web Console at

http://127.0.0.1:8383in your browser. - Enter the API token if the authorization is enabled.

- Select the bucket that contains the data you want to query:

- You will see a list of all the entries in the bucket

- Click on the entry you want to query

- On the entry page you will see the query panel:

- Enter the query condition in the "Filter Records (JSON)" input field

- Click the Fetch Records button to execute the query

- You will see the meta information about the records that match the condition. You can click on the download icon to download content of the record.

#!/bin/bash

set -e -x

API_PATH="http://127.0.0.1:8383/api/v1"

AUTH_HEADER="Authorization: Bearer my-token"

# // Query 10 photos from "imdb" entry which taken after 2006 with the face score less than 4

ID=`curl -H "${AUTH_HEADER}" \

-d '{

"query_type": "QUERY",

"limit": 10,

"when": {

"&photo_taken": {"$gt": 2006},

"&face_score": {"$lt": 4}

}' -X POST -a "${API_PATH}/b/example-bucket/imdb/q" | jq -r ".id"`

# Fetch the data (without batching) until the end

curl -H "${AUTH_HEADER}" -X GET -a "${API_PATH}/b/example-bucket/imdb?q=${ID}" --output ./phot_1.jpeg

curl -H "${AUTH_HEADER}" -X GET -a "${API_PATH}/b/example-bucket/imdb?q=${ID}" --output ./phot_2.jpeg

The when condition filters data in the time range defined by the start and stop parameters. If the start and stop parameters are not set, the condition is applied to all records in the entry.

Limit your queries to a specific time range to improve performance.