How to Keep a History of MQTT Data With Python

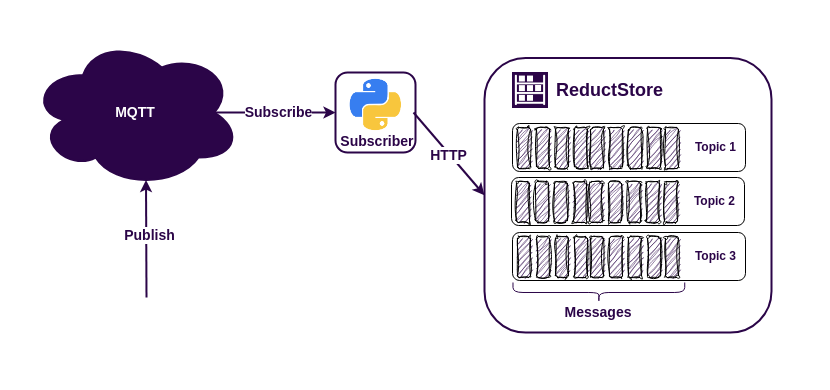

The MQTT protocol is an easy way to connect disparate data sources to applications, which makes it very popular in IoT (Internet of Things) systems. Some MQTT brokers can hold messages for a while, for example by queuing them for a client with a persistent session while that client is offline. However, sometimes you need to keep this data for much longer. In these cases, it's a good idea to use a time series database.
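To make "keeping a history" concrete, here is a toy, in-memory sketch of the core job a time series database does for MQTT data. Every message is stored with a timestamp, and you can ask for all messages on a topic within a time window. The `MessageHistory` class and its methods are hypothetical and are not ReductStore's API. A real database also provides persistence, retention limits and much more.

```python
import bisect


class MessageHistory:
    """Toy in-memory history of MQTT payloads, keyed by topic (hypothetical helper)."""

    def __init__(self) -> None:
        # topic -> parallel lists of timestamps (kept sorted) and payloads
        self._timestamps: dict = {}
        self._payloads: dict = {}

    def append(self, topic: str, payload: bytes, timestamp_us: int) -> None:
        """Store one message, keeping each topic's records ordered by time."""
        ts = self._timestamps.setdefault(topic, [])
        payloads = self._payloads.setdefault(topic, [])
        idx = bisect.bisect_right(ts, timestamp_us)
        ts.insert(idx, timestamp_us)
        payloads.insert(idx, payload)

    def query(self, topic: str, start_us: int, stop_us: int) -> list:
        """Return (timestamp, payload) pairs with start_us <= timestamp < stop_us."""
        ts = self._timestamps.get(topic, [])
        payloads = self._payloads.get(topic, [])
        lo = bisect.bisect_left(ts, start_us)
        hi = bisect.bisect_left(ts, stop_us)
        return list(zip(ts[lo:hi], payloads[lo:hi]))


if __name__ == "__main__":
    history = MessageHistory()
    history.append("sensors/temp", b"21.5", 1_000)
    history.append("sensors/temp", b"21.7", 2_000)
    history.append("sensors/temp", b"22.0", 3_000)
    print(history.query("sensors/temp", 1_500, 3_000))  # → [(2000, b'21.7')]
```

Keeping each topic's records sorted by timestamp makes time-range queries a pair of binary searches. A broker's offline queue, by contrast, is drained as soon as the client reconnects.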

There are many databases available for storing MQTT data, but if you need to store a history of images, sensor data, or protobuf messages, you might want to use ReductStore. This time series database is designed to store large amounts of blob (binary) data and works well for IoT and edge computing.

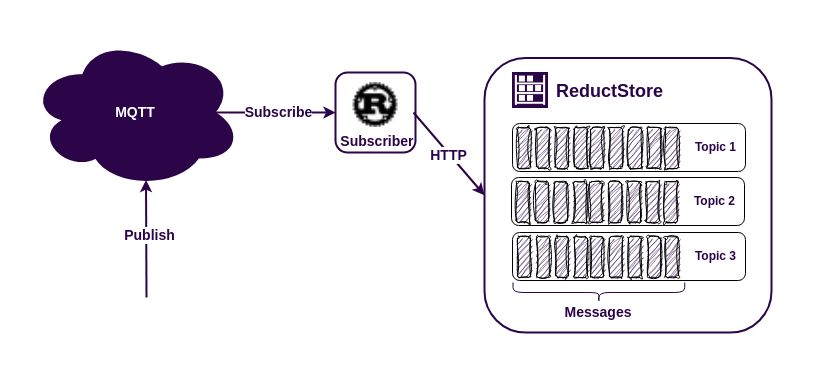

ReductStore provides client SDKs (software development kits) for many programming languages, so you can easily integrate it into an existing system. In this example, we'll use the ReductStore Python SDK.
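As a rough sketch of how MQTT messages could be handed to the Python SDK, the adapter below writes each payload as a timestamped blob record. It assumes the SDK's bucket object exposes an async `write(entry_name, data, timestamp=...)` method that accepts an integer timestamp in microseconds since the UNIX epoch. Check the reduct-py reference for the exact signature in your version. `topic_to_entry`, `store_message` and `FakeBucket` are hypothetical names. The entry-name character rule is also an assumption. `FakeBucket` stands in for a real bucket so the sketch runs without a server.

```python
import asyncio
import re
import time
from typing import Optional, Protocol


class Bucket(Protocol):
    """Minimal interface this sketch assumes from a ReductStore bucket."""

    async def write(self, entry_name: str, data: bytes, timestamp: int) -> None: ...


def topic_to_entry(topic: str) -> str:
    """Map an MQTT topic to an entry name.

    Assumption: entry names should contain only letters, digits, '-' and '_',
    so every other character (including the '/' level separator) becomes '_'.
    """
    return re.sub(r"[^A-Za-z0-9_-]", "_", topic)


async def store_message(
    bucket: Bucket, topic: str, payload: bytes, timestamp_us: Optional[int] = None
) -> str:
    """Write one MQTT payload as a blob record and return the entry name used."""
    if timestamp_us is None:
        # Wall-clock time in microseconds since the UNIX epoch.
        timestamp_us = time.time_ns() // 1_000
    entry = topic_to_entry(topic)
    await bucket.write(entry, payload, timestamp=timestamp_us)
    return entry


class FakeBucket:
    """In-memory stand-in so the sketch runs without a ReductStore server."""

    def __init__(self) -> None:
        self.records: list = []

    async def write(self, entry_name: str, data: bytes, timestamp: int) -> None:
        self.records.append((entry_name, timestamp, data))


if __name__ == "__main__":
    bucket = FakeBucket()
    # The payload is opaque bytes: a JPEG frame, a protobuf message, or plain text.
    asyncio.run(store_message(bucket, "factory/line1/camera", b"\xff\xd8", 1_700_000_000_000_000))
    print(bucket.records)
```

Because the payload is passed through as raw bytes, the same adapter works for images, sensor readings, and protobuf messages alike. With the real SDK, you would pass a bucket obtained from the client instead of `FakeBucket`.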

Let's create a simple MQTT application to see how it all works.